Free SEO Tools | Best Free SEO Tools (100% Free)

Search engine optimization (SEO) is the process of improving the quality and quantity of the traffic that a website or web page receives from search engines. SEO targets unpaid traffic (called 'natural' or 'organic' results) rather than paid traffic. Unpaid traffic can come from different types of searches, such as image search, video search, academic search, news search and industry-specific vertical search engines.

As an internet marketing technique, SEO considers how search engines work, the computer-programmed algorithms that dictate search engine behaviour, the search terms or keywords typed into search engines, and which search engines the intended audience prefers. SEO is done because a website gets more visits from a search engine when its pages rank higher on the search engine results page (SERP). These visitors can then be turned into customers.

Relationship with Google:

In 1998, Larry Page and Sergey Brin, two graduate students at Stanford University, created "Backrub", a search engine built on a mathematical algorithm that rates the prominence of web pages. PageRank, the number calculated by the algorithm, is a function of the quantity and strength of a page's inbound links. It estimates the likelihood that a given page will be reached by a web user who surfs the web at random and follows links from one page to another. In essence, this means that some links are stronger than others, because the random web surfer is more likely to reach a page with a higher PageRank.
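
The random-surfer idea can be sketched in a few lines of Python. This is only an illustrative power-iteration version, not Google's production algorithm: the function name `pagerank`, the damping factor of 0.85, the iteration count and the four-page toy web are all assumptions made for the example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Estimate PageRank by power iteration.

    `links` maps each page to the list of pages it links to.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # With probability (1 - damping) the surfer jumps to a random page.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Otherwise the surfer follows one of the outbound links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outbound links spreads its rank evenly.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical four-page site: "orphan" links out but nothing links to it.
toy_web = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "orphan": ["home"],
}
ranks = pagerank(toy_web)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.3f}")
```

In this toy web, "home" ends up with the highest score because three pages link to it, while "orphan" only receives the random-jump share because it has no inbound links.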

Page and Brin founded Google in 1998. Google attracted a loyal following among the growing number of internet users, who liked its simple design.[22] Google considered off-page factors (e.g. PageRank and hyperlink analysis) as well as on-page factors (e.g. keyword frequency, meta tags, headings, links and site structure), which helped it avoid the kind of manipulation seen in search engines that only took on-page factors into account. Although PageRank was harder to game, webmasters had already created link-building tools and schemes to influence the Inktomi search engine, and these methods proved similarly applicable to gaming PageRank.

Many websites focused on exchanging, buying and selling links. Some of these schemes involved the creation of thousands of websites solely for the purpose of link spamming.

Getting indexed:

The biggest search engines, such as Google, Bing and Yahoo!, use crawlers to find pages for their algorithmic search results. Pages that are linked from other pages already in a search engine's index do not need to be submitted, because they are found automatically. The Yahoo! Directory and DMOZ, two big directories that closed in 2014 and 2017 respectively, both required manual submission and human editorial review.

Google provides Google Search Console, where an XML sitemap feed can be created and submitted for free to ensure that all pages are found, in particular pages that cannot be discovered automatically by following links. This is in addition to its URL submission console.
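
As a rough illustration of what such an XML sitemap feed contains, the sketch below builds a minimal one with Python's standard library. The `build_sitemap` helper and the example.com URLs are hypothetical. The namespace is the one defined by the sitemaps.org protocol, and only the required `<loc>` element is emitted.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages, including one that no other page links to.
sitemap_xml = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/landing-page-without-inbound-links",
])
print(sitemap_xml)
```

The resulting file would typically be saved as `sitemap.xml` on the site and then submitted through Search Console.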

Yahoo! used to run a paid submission service that guaranteed crawling for a cost per click, but this was discontinued in 2009.

Search engine crawlers may look at a variety of factors when crawling a site. Not every page is indexed by search engines. The distance of a page from a site's root directory may also be a factor in whether or not it gets crawled.
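
One simple way to get a feel for "distance from the root directory" is to count the path segments in a URL. The sketch below does only that. The `path_depth` helper and the example URLs are hypothetical, and path depth is just one rough proxy, not a measure that any search engine is known to use in this exact form.

```python
from urllib.parse import urlsplit


def path_depth(url):
    """Count the non-empty path segments between the site root and the page."""
    path = urlsplit(url).path
    return len([segment for segment in path.split("/") if segment])


# Hypothetical URLs, sorted from shallowest to deepest.
urls = [
    "https://www.example.com/shop/shoes/running/trail/model-x",
    "https://www.example.com/",
    "https://www.example.com/blog/seo-tools",
]
for url in sorted(urls, key=path_depth):
    print(path_depth(url), url)
```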

Today, most users search on Google using a mobile device. In November 2016, Google announced a major change to the way it crawls websites and began making its index mobile-first. That means that the mobile version of a given website is the basis for what Google includes in its index. In May 2019, Google updated the rendering engine of its crawler to the latest version of Chromium (74 at the time of the announcement). Google confirmed that it would regularly update the Chromium rendering engine to the latest version. In December 2019, Google started updating its crawler's User-Agent string to reflect the latest Chrome version used by its rendering service. The delay was meant to give webmasters time to update code that responded to particular bot User-Agent strings. Google ran evaluations and was confident that the impact would be minor.
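
The kind of code affected by that change is code that compares the whole User-Agent string against a fixed value. A more tolerant approach, sketched below, looks only for the `Googlebot` token and ignores the Chrome version that follows it. The function name `claims_to_be_googlebot` is hypothetical, and the sample strings are illustrative: the version numbers are placeholders, not a record of what Google actually sent.

```python
import re

# Match the "Googlebot" product token anywhere in the header and ignore
# whichever Chrome version happens to appear after it.
GOOGLEBOT_TOKEN = re.compile(r"\bGooglebot\b", re.IGNORECASE)


def claims_to_be_googlebot(user_agent):
    """Return True if the User-Agent header contains the Googlebot token."""
    return bool(GOOGLEBOT_TOKEN.search(user_agent or ""))


# Illustrative header values; the Chrome versions are placeholders.
UA_CHROME_74 = (
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
    "Googlebot/2.1; +http://www.google.com/bot.html) "
    "Chrome/74.0.3729.131 Safari/537.36"
)
UA_NEWER = UA_CHROME_74.replace("Chrome/74.0.3729.131", "Chrome/99.0.0.0")
UA_BROWSER = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/99.0.0.0 Safari/537.36"
)

# An exact-match check stops working as soon as the version changes...
print(UA_NEWER == UA_CHROME_74)
# ...while the token check keeps working for both versions.
print(claims_to_be_googlebot(UA_CHROME_74), claims_to_be_googlebot(UA_NEWER))
```

Because any client can send this header, a check like this only establishes what a visitor claims to be, not that the request really comes from Google.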

Preventing crawling:

To keep undesirable content out of search indexes, webmasters can instruct spiders not to crawl certain files or folders through the standard robots.txt file in the root directory of the domain. In addition, a page can be explicitly excluded from a search engine's index by using a meta tag specific to robots (usually `<meta name="robots" content="noindex">`). When a search engine visits a site, the robots.txt in the root directory is the first file crawled. The robots.txt file is then parsed, and it tells the robot which pages are not to be crawled. Because a search engine crawler may keep a cached copy of this file, it may occasionally crawl pages that a webmaster does not want crawled. Pages that are typically kept from being crawled include login-specific pages, such as shopping carts, and user-specific content, such as the results of internal searches. In March 2007, Google warned webmasters that they should prevent indexing of internal search results because such pages are considered search spam.
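
To see how a crawler might interpret such rules, the sketch below feeds a small robots.txt to Python's standard `urllib.robotparser` module and asks which URLs may be fetched. The rules, the `/cart/` and `/search` paths, the `is_crawlable` helper and the example.com URLs are assumptions made for the illustration. Real crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks shopping-cart pages and internal search results.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, user_agent="*"):
    """Return True if the parsed rules allow `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)


for url in [
    "https://www.example.com/blog/free-seo-tools",
    "https://www.example.com/cart/checkout",
    "https://www.example.com/search?q=shoes",
]:
    print(url, "->", "allowed" if is_crawlable(url) else "blocked")
```

Note that robots.txt only asks crawlers not to fetch a page. Removing a page from the index itself is the job of the robots meta tag mentioned above.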

Best Free SEO Tools (100% Free):

1. Bing Webmaster Tools:

Although Google Webmaster Tools gets all the glory, people forget that Bing Webmaster offers a full suite of website and search analytics. The keyword reports, keyword research and crawling data are especially useful.

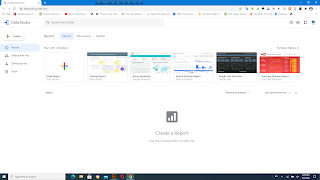

2. Data Studio:

If data from multiple sources (Search Console, Google Analytics, etc.) needs to be combined, visualized and shared, that is the comfort zone of Google Data Studio. For an idea of all the SEO tasks and dashboards that you can create for free, check out these Google Data Studio resources from Lee Hurst.

Get it: Data Studio

3. Enhanced Google Analytics Annotations:

When your traffic dips (or rises), how do you know whether it is related to a Google algorithm update? This highly recommended Google Chrome extension overlays additional data on top of your analytics, so you can conveniently send clients screenshots that show exactly how external factors affected traffic.

The great kahuna and web analytics kit that is most used on earth.

Google Analytics, which is free, is surprisingly powerful and

integrates well with other Google products such as Refine, Search

Console and Data Studio. Some users are concerned in GA's privacy

— even though Google does not use these data for search rankings.

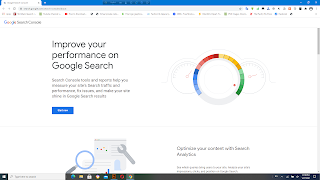

Perhaps the most valuable free SEO platform in this entire list,

without access to data inside Google's Search Console, it is hard

to imagine using modern SEO. This is the most reliable way to

discover how Google rates and ranks the website and is one of the

few ways to get trustworthy keywords. Search Database restricts

downloads to 1000 rows so that you can download up to 25,000 rows

at a time by bookmarking the free Console Data Explorer.

Did anybody tell (not given)? Keyword Hero works with

sophisticated mathematical and machine learning to solve the

problem of lost keyword info. This isn't a seamless method, but

data can be a helpful step in the right direction for those who

fail to align keywords with conversion and other metrics. Up to

2000 sessions/month is free of charge.

Dr Pete's brainchild and the original Google SERP tracker is the

algorithm tracker whether a major change has been made, or not.

MozCast is not the same. SERP monitoring capabilities are also

helpful, revealing that advertising and information panels are

popular in their features.
